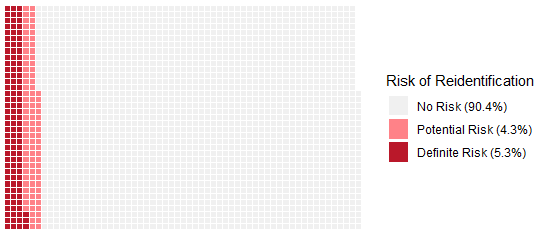

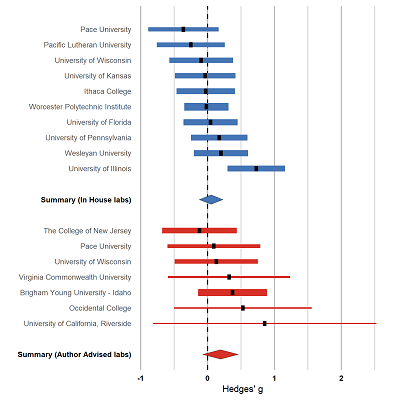

Our prior work demonstrated that 5–10% of open datasets contain potential privacy violations, so we developed DataCheck, a tool that automatically scans a dataset and flags 14 types of common privacy violations before a researcher makes the data public. DataCheck is available as both a web app and an R package, and it runs locally, so there is no risk of exposing participant data during the scan. In validation on both live and simulated datasets, it was >98% accurate.
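To make the idea of a local, pattern-based scan concrete, here is a minimal sketch in Python. It is not DataCheck's implementation or API: the function name `scan_rows`, the two checks (email addresses and IPv4 addresses), and the sample data are all assumptions chosen for illustration, and they do not correspond to the tool's 14 violation types. Nothing is sent over the network; the data is read and checked in memory.

```python
import csv
import io
import re

# Hypothetical checks for illustration only; not DataCheck's actual categories.
PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "ipv4": re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"),
}


def scan_rows(rows):
    """Return (row_number, column, check_name) for every cell matching a pattern."""
    flags = []
    for row_number, row in enumerate(rows, start=1):
        for column, value in row.items():
            for name, pattern in PATTERNS.items():
                # Skip empty or missing cells, then test the cell text.
                if value and pattern.search(value):
                    flags.append((row_number, column, name))
    return flags


# Made-up sample data standing in for a dataset file on the local disk.
sample = io.StringIO(
    "id,comment,source\n"
    "1,contact me at jane@example.org,192.168.0.12\n"
    "2,no identifying text,\n"
)
flags = scan_rows(csv.DictReader(sample))
for flag in flags:
    print(flag)
```

A real scanner would need far more than regular expressions (for example, checks on column names, dates, or rare combinations of demographic values), but the shape is the same: read the file locally, test each cell or column against a set of rules, and report where each rule fired so the researcher can review it before sharing.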